With the Bonse-Hart instrument still out of commission (yet another failure in the X-ray generator target assembly), I decided it was time to have another look at the data correction procedures. Some of you may remember that I wrote a comprehensive overview of all conceivable corrections in a recent open-access review paper. So far I have implemented only some of the corrections described there, and it might be time to implement most of them.

I have programmed data correction procedures about three times now, each time applying lessons learnt from the previous implementations. The first was written in Matlab, and had a nice GUI front-end allowing for the processing of multiple samples. After switching to Python (due to untenable Matlab licenses), this needed to be rewritten. Thus, the “imp” was born, short for: “the impossible project”. The imp required every measurement to come with a text file containing the correction parameters. Whenever such a text file was created, a daemon would spring into action and try to perform the corrections.

After a while I decided I wanted something more database-y again, and so I started the sasHDF project. Here, a single text file is used as a configuration file for base settings, and a second file would be used containing sample information. All the samples would be stored in a single HDF5 file, complete with supplementary information. While this seemed to work fine, it was a little frightening to use as it introduced a single point of failure: the HDF5 file containing everything. It also had only a subset of corrections as we were working with a very nice detector back then.

In a few months, I will get the chance to work with an instrument again. This one, however, is an older instrument which will be adapted to work with molybdenum radiation. As such, it still has one of the older wire detectors, which are a bit more challenging to correct. Additionally, since starting to program with *real* programmers (on the McSAS and fabIO projects), I feel I have learned a bit more about proper programming styles. Now that I also have a modicum of time available, I have started work on the next data correction program, tentatively called “imp2”.

As the name suggests, this variant has an approach that is more similar to the “imp” system: every datafile has a configuration file containing all the required information for data reduction.
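To make the one-configuration-file-per-datafile idea concrete, here is a minimal sketch. The pairing convention (same file name, `.json` extension), the function names and the parameter keys are all assumptions made for illustration; none of them are taken from the imp or imp2 code.

```python
import json
import tempfile
from pathlib import Path


def config_path_for(datafile: Path) -> Path:
    """Hypothetical convention: 'scan_001.edf' pairs with 'scan_001.json'."""
    return datafile.with_suffix(".json")


def load_reduction_config(datafile: Path) -> dict:
    """Read the correction parameters that accompany a single datafile."""
    with config_path_for(datafile).open() as handle:
        return json.load(handle)


# Demonstration in a throwaway directory, with made-up parameter values.
with tempfile.TemporaryDirectory() as tmp:
    datafile = Path(tmp) / "scan_001.edf"
    config_path_for(datafile).write_text(
        json.dumps({"exposure_time_s": 600.0, "transmission": 0.42})
    )
    demo_config = load_reduction_config(datafile)
```

Keeping the parameters next to the data means a measurement and its settings travel together, which avoids the single point of failure of one all-encompassing file.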

Changes and improvements over the previous imp will be:

- completely rewritten codebase (hosted on a git server) under the GPLv3 license

- offloading the data read-in procedure to “fabio” from Jerome Kieffer & co.

- offloading the configuration file read-in procedure to ConfigParser or JSON

- offloading the integration procedure to the pyFAI project

- using the “logging” package for the logging procedures

- adding an image distortion correction procedure (perhaps using the one from pyFAI)

- data storage in SAS2012-compatible HDF5 files (2D) and ascii (1D) files

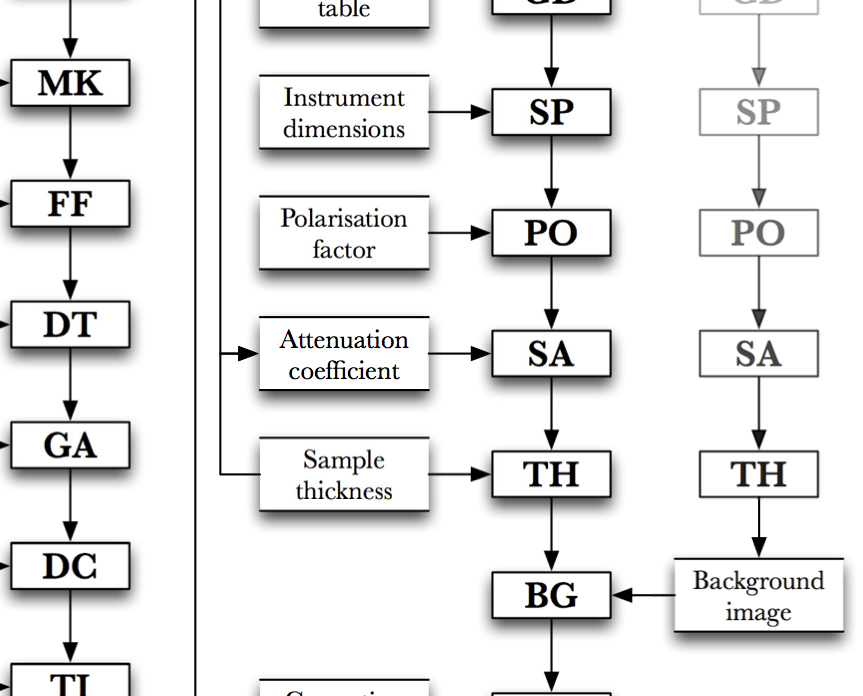

- correction steps separated and implemented independently as defined in the review paper
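As a rough illustration of two of the points above (independent correction steps and the use of the “logging” package), the structure could look something like the sketch below. Every class and function name here is hypothetical, the two corrections were picked only as simple examples, and a plain list of counts stands in for real detector data.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("imp2_sketch")  # hypothetical logger name


@dataclass
class Frame:
    """Minimal stand-in for a detector frame: a flat list of counts."""
    counts: list


class CorrectionStep:
    """Base class: each correction is a self-contained, testable unit."""

    def apply(self, frame: Frame, config: dict) -> Frame:
        raise NotImplementedError


class TimeNormalisation(CorrectionStep):
    """Divide the counts by the exposure time."""

    def apply(self, frame: Frame, config: dict) -> Frame:
        exposure = config["exposure_time_s"]
        return Frame([c / exposure for c in frame.counts])


class TransmissionNormalisation(CorrectionStep):
    """Divide the counts by the sample transmission factor."""

    def apply(self, frame: Frame, config: dict) -> Frame:
        transmission = config["transmission"]
        return Frame([c / transmission for c in frame.counts])


def run_pipeline(frame: Frame, config: dict, steps: list) -> Frame:
    """Apply the steps in order, logging each one as it runs."""
    for step in steps:
        logger.info("applying %s", type(step).__name__)
        frame = step.apply(frame, config)
    return frame


# Made-up values, purely for demonstration.
config = {"exposure_time_s": 10.0, "transmission": 0.5}
steps = [TimeNormalisation(), TransmissionNormalisation()]
result = run_pipeline(Frame([100.0, 200.0]), config, steps)
```

Because each step only sees a frame and the configuration, steps can be tested, reordered or left out without touching the others.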

While the program is in its infancy (more precisely, in its prenatal stage), the structure is gradually becoming clearer as more base classes take shape. Naturally, collaborations on the programming and design aspects are very welcome. Check out the git repository for more details!
