So with our MOUSE instrument churning through an unprecedented number of samples, we’ve got to keep it well fed, preferably with consistent sample series. That’s why we started looking into robotics a bit over a year ago in a bid to help us prepare large systematic series of samples, and to increase our in-situ options. Here’s how we built it up, and what the first project on the RoWaN (Robot Without a Name) platform looked like.

Lab automation is not new, so why don’t we just buy a Chemspeed and be done with it? I am not a fan of that approach, as it would be very expensive and very restrictive. If you buy a finished product, you are confined to the usage scenarios its makers imagined (in practice, the ones the software allows for). In other words, you will likely never use such a machine for anything beyond what the manufacturer intended. If you want to innovate, you have to be able to open things up, rearrange, adapt and reimagine, just as we did with the MOUSE.

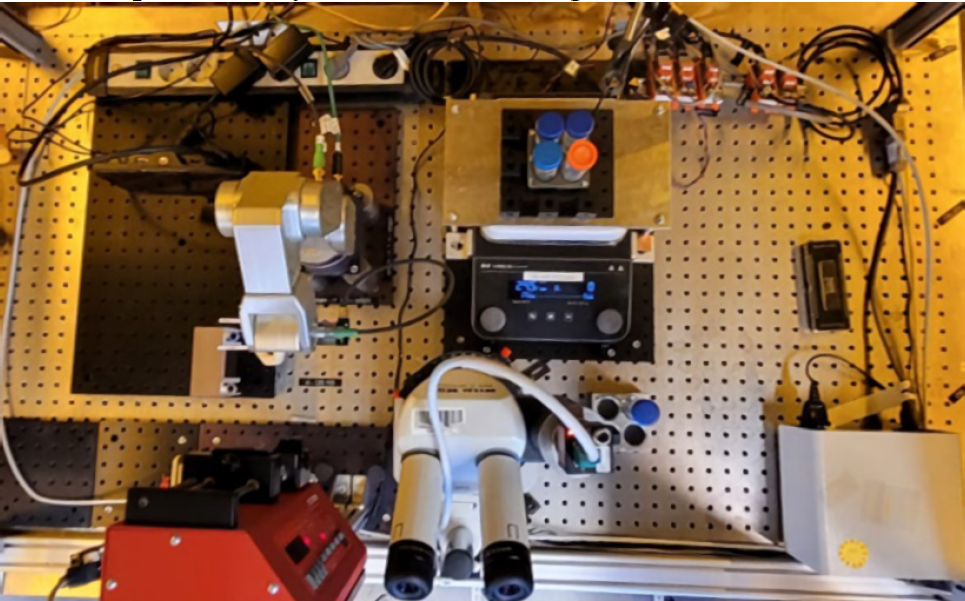

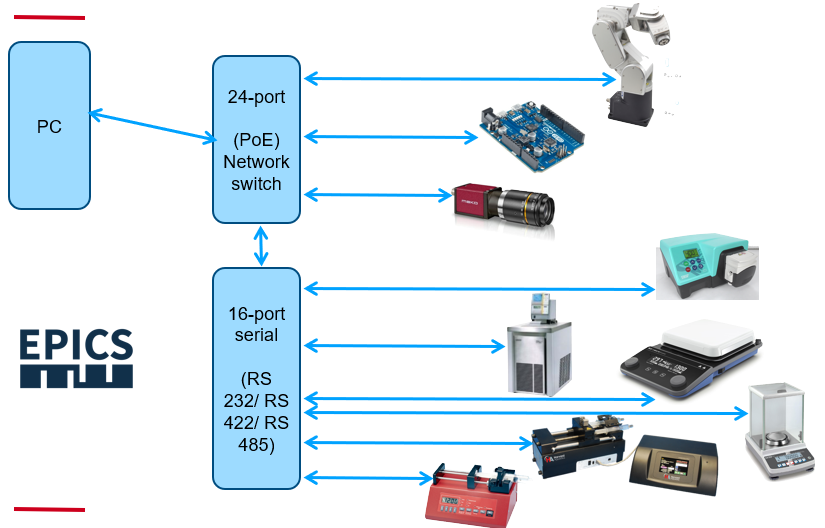

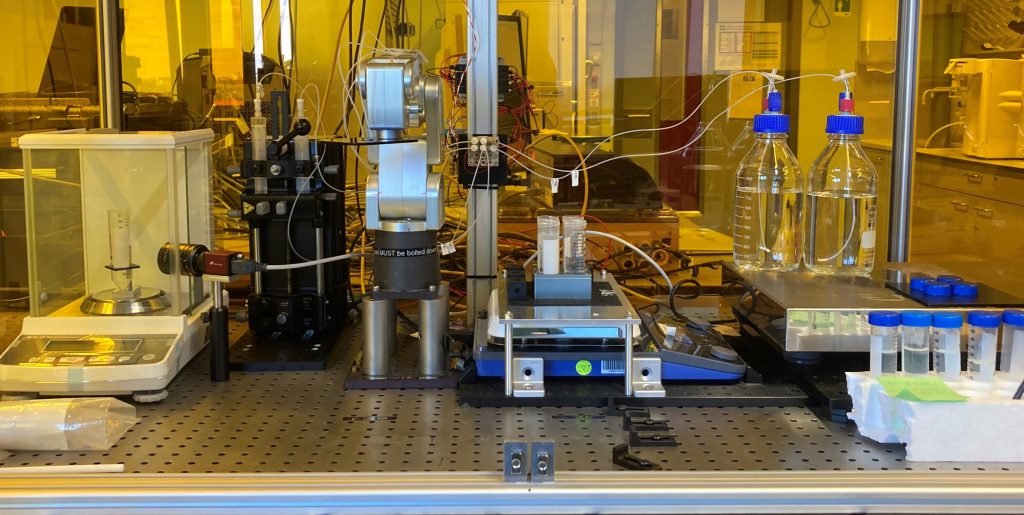

So, like any project, this one started with me buying a lot of potentially useful stuff. Since we had already aimed to automate as much as we could in the MOUSE, any additional hardware we bought, such as hotplates, syringe injectors or balances, had to have some sort of serial (RS-232/422/485) or Ethernet interface; a USB interface alone was not sufficient. Lastly, we bought a shiny robot arm, the Mecademic Meca500, which, as it turns out, is a super nice arm to work with. It has good open-source software libraries and a high position repeatability (within 5 microns), and while it is small, it is very precise and capable, making it a good entry into the world of six-axis robot arms. Add to this a borrowed optical table, some networking components and other bits and bobs, and we were good to go for the first project. Many thanks to my colleagues Dr. Özlem Özcan, Prof. Franziska Emmerling, and Prof. Heinz Sturm for funding and supporting this initial conceptual start-up stage.

We were joined by two students: the student assistant Julius Frontzek, and the Master’s project student Bofeng Du. Together, we set out to turn this eclectic smattering of laboratory hardware into a functional robot.

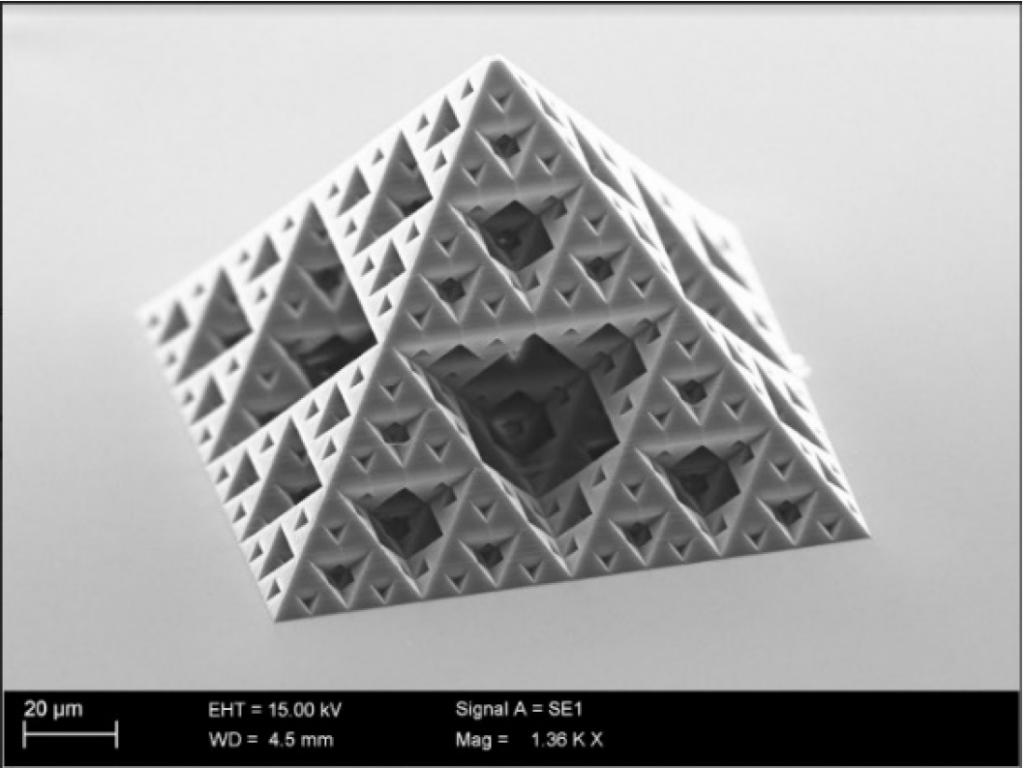

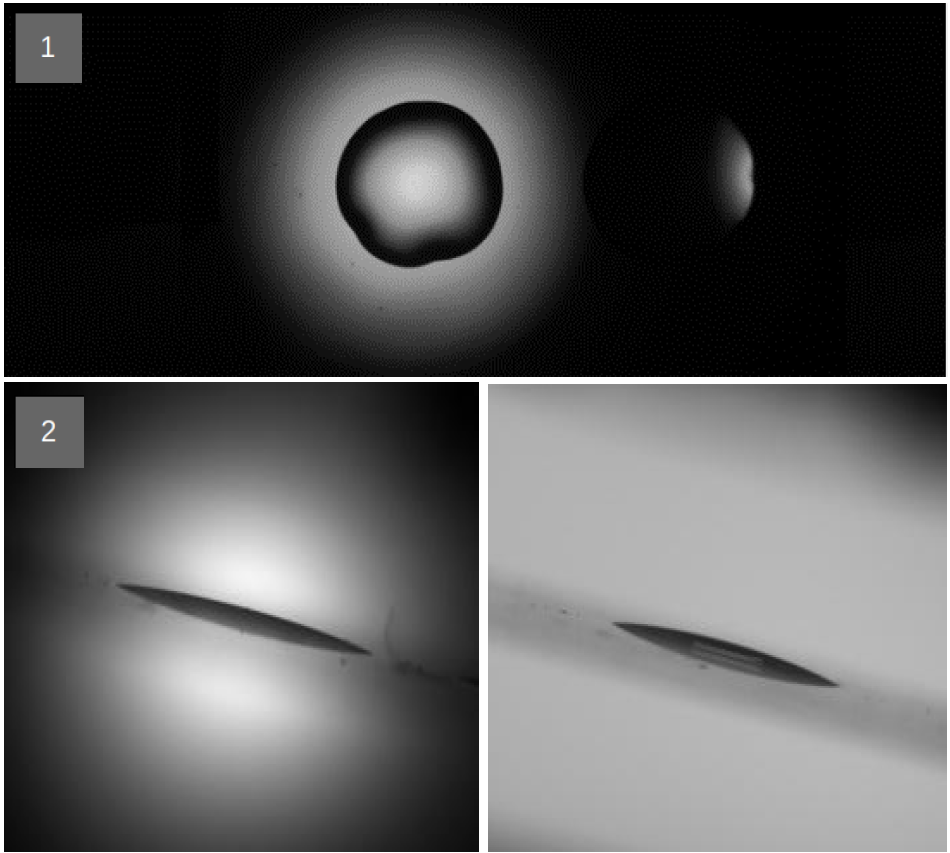

The Master’s project set out to use RoWaN to produce consistent photopolymer resin drops on glass slides. These drops can be used in our multi-photon laser structuring (MPLS, a.k.a. two-photon polymerization or 2PP) system to write micron-sized 3D polymer structures. More on that particular avenue of research in a future post. Suffice it to say that the starting material for this fancy 3D printer is a drop of resin on a glass slide: the more consistent the drop, the more consistent the result.

In brief, the glass slide needs to be handled by the robot arm, which takes it past the stations where:

- the slides are picked up from a rack,

- the drops are placed onto the glass by a syringe injector,

- the glass with drops can be baked on a hotplate,

- a top-down microscope image can be obtained to get an idea about drop roundness,

- a side-on microscope image can be obtained to get an idea about the drop profile, and

- the finished slide is put back onto a rack.

For scripting the robot, I decided on the Jupyter Notebook format; it allows for notes alongside the control code, and it can display and log output images and values coming back from the robot. In that sense, a notebook can act like a recipe, which gets filled with experimental details during a run and can be stored as a self-describing experiment log.
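To make the “notebook as recipe” idea a little more concrete, here is a minimal sketch of the station sequence above written as data, with a runner that records what happened at each step so the log doubles as a self-describing experiment record. The station names, action names, the `run_recipe` helper and the dummy action are all hypothetical placeholders, not the actual RoWaN notebook code; in a real notebook each action would call the hardware.

```python
import json
import time

# Hypothetical recipe mirroring the steps above: (station, action, parameters)
RECIPE = [
    ("rack", "pick_slide", {"slot": 1}),
    ("injector", "dispense_drops", {}),
    ("hotplate", "bake", {}),
    ("top_microscope", "image_top", {}),
    ("side_microscope", "image_side", {}),
    ("rack", "place_slide", {"slot": 1}),
]


def run_recipe(recipe, actions, log_path=None):
    """Execute each step in order and log it, so the run documents itself."""
    log = []
    for station, action, params in recipe:
        started = time.time()
        result = actions[action](station, **params)
        log.append({"station": station, "action": action, "params": params,
                    "started": started, "finished": time.time(),
                    "result": result})
    if log_path is not None:
        # Store the filled-in recipe next to the notebook as a JSON record
        with open(log_path, "w") as f:
            json.dump(log, f, indent=2)
    return log


def fake_action(station, **params):
    """Dummy stand-in for a real hardware call."""
    return f"ok at {station}"


actions = {name: fake_action for _, name, _ in RECIPE}
log = run_recipe(RECIPE, actions)
print([entry["action"] for entry in log])
```

Keeping the recipe as plain data means the same list can be printed, edited in a notebook cell, or saved alongside the results.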

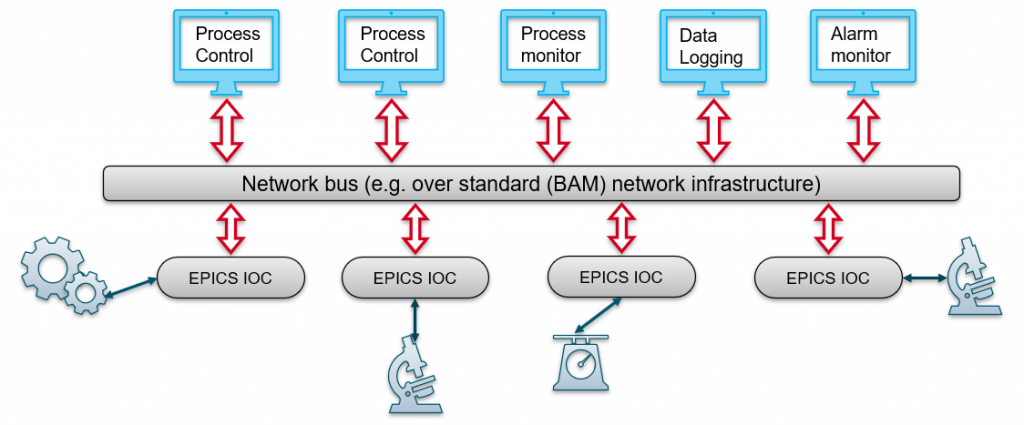

For control of the robot and its paraphernalia, I explored several options. While it is in principle possible to address each piece of hardware directly using serial calls in the Jupyter notebook, I wanted a more consistent, but also asynchronous, interface that would allow me to relegate hardware control to background processes. After asking several experienced people for advice on this (including Dr. Mark Kozdras and his colleagues, who are establishing robotics platforms worldwide, but also my contacts at LBL), I decided to go for the well-established, open-source EPICS control system. EPICS is often used to run large facilities and their beamlines, and so would be more than capable of running this little robot. An additional benefit is that any hardware controlled this way could also be used directly on the MOUSE or at the synchrotron if we needed it. EPICS seems complex in the beginning, but we were greatly helped by the caproto Python library, which makes installing EPICS a one-line command and allows us to program individual hardware IOCs (Input/Output Controllers, the EPICS servers that expose a device’s process variables) in a day or two. Depending on the configuration, EPICS IOCs can be read and controlled by multiple clients, allowing for remote monitoring, logging, alarms and emergency operation, independent of the controlling computer.
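The core idea of relegating hardware control to the background can be illustrated without EPICS at all. The sketch below is not EPICS or caproto: it is a dependency-free asyncio stand-in, assuming a hypothetical simulated hotplate, where a background task keeps polling the device while the “client” merely writes a setpoint and carries on. In caproto, one would instead subclass `PVGroup` and declare `pvproperty` attributes, which clients anywhere on the network can then read and write.

```python
import asyncio


class SimulatedHotplate:
    """Hypothetical stand-in for a serial-connected hotplate."""

    def __init__(self, start_temp=25.0, rate=0.5):
        self.temperature = start_temp  # readback, updated by the background task
        self.setpoint = start_temp
        self.rate = rate  # fraction of the remaining gap closed per poll

    def poll(self):
        # Pretend to query the device: temperature relaxes toward the setpoint
        self.temperature += self.rate * (self.setpoint - self.temperature)
        return self.temperature


async def scan_loop(device, period, readings, stop):
    """Background task: poll the device periodically until told to stop."""
    while not stop.is_set():
        readings.append(device.poll())
        await asyncio.sleep(period)


async def main():
    plate = SimulatedHotplate()
    readings = []
    stop = asyncio.Event()
    task = asyncio.create_task(scan_loop(plate, 0.01, readings, stop))
    plate.setpoint = 80.0       # the "client" only writes a setpoint...
    await asyncio.sleep(0.2)    # ...and is free to do other work meanwhile
    stop.set()
    await task
    return plate, readings


plate, readings = asyncio.run(main())
print(f"{len(readings)} readings, last = {readings[-1]:.1f} °C")
```

The controlling code never blocks on the device; it only touches the setpoint and readback, which is roughly the division of labour that an IOC provides.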

Overkill? Absolutely, but that just means we won’t be as limited when realizing future stages. On the hardware side, the backend consists of a powerful Linux computer, a Netgear PoE network switch, and a 16-port MOXA network-to-serial converter, to which everything is connected. Since the whole robotics setup is connected to the control computer over a single Ethernet connection anyway, a future upgrade will replace the powerful Linux computer with a Linux laptop, making everything a bit more portable for synchrotron experiments. Now that it’s set up, adding new pieces of hardware is easy, and they are interoperable. We were also helped by Emilio Perez Juarez from Diamond Light Source, who provided several excellent IOCs they had already written. So far, we have the following pieces ready to be used, with several more in the works:
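With a network-to-serial converter, each serial device is reached through a TCP socket instead of a local COM port. Below is a minimal sketch of one command/reply exchange, assuming the converter port is set to a raw TCP-server mode and the device speaks line-terminated ASCII; the `query` helper, the `TEMP?` command and the reply are hypothetical, and the demo talks to a local fake device rather than real hardware.

```python
import socket
import threading


def query(host, port, command, terminator=b"\r\n", timeout=2.0):
    """Send one ASCII command to a serial device behind a network-to-serial
    converter (raw TCP mode assumed) and return the reply line."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(command.encode("ascii") + terminator)
        buffer = b""
        while not buffer.endswith(terminator):
            chunk = sock.recv(1024)
            if not chunk:  # connection closed before a full reply arrived
                break
            buffer += chunk
    return buffer.decode("ascii").strip()


def fake_device_server(server_sock):
    """Stand-in for a serial device: answers 'TEMP?' with a fixed reading."""
    conn, _ = server_sock.accept()
    with conn:
        request = b""
        while not request.endswith(b"\r\n"):
            chunk = conn.recv(1024)
            if not chunk:
                return
            request += chunk
        reply = b"25.0\r\n" if request.strip() == b"TEMP?" else b"ERR\r\n"
        conn.sendall(reply)


# Demo against a local fake device instead of the converter
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
server.listen(1)
port = server.getsockname()[1]
threading.Thread(target=fake_device_server, args=(server,), daemon=True).start()
reading = query("127.0.0.1", port, "TEMP?")
server.close()
print(reading)
```

An IOC would typically wrap exchanges like this, so the notebook never deals with sockets or terminators directly.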

- A Kern balance (max. 120 g)

- Two Allied Vision “Mako” PoE scientific camera modules

- A Lauda heating/cooling bath (with external liquid circulation option)

- An IKA hotplate/stirrer

- A Watson-Marlow peristaltic pump

- An Arduino with environmental sensors and four solid-state relays connected to four liquid 2/3-way valves

- A Harvard syringe pump (heavy duty)

- Two Aladdin syringe pumps

- Two Trinamic 6-axis stepper motor control boards

- The Meca500 robot arm

- Omron PID controllers

With all those bits and bobs, keeping track of the physical locations of all these parts in an integrated robotics system is another challenge, and one that is not covered by existing open-source technologies to date. If you know where each object is in a 3D digital twin of your system, you can avoid accidentally crashing your robot arm into any of them. Tracking object locations also means tracking object inheritance. For example, when you put your robot arm on a track, the position of the arm in the robot platform space depends on the arm’s position on the track. Likewise, if your sample carrier is picked up by the robot arm, the locations and orientations of several objects change with the motion of that arm.
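Object inheritance is commonly handled with a tree of coordinate frames, where each object stores its pose relative to a parent. Here is a minimal sketch of that technique, assuming poses in millimetres with rotation only about the vertical axis (a real six-axis arm needs full 3D rotations); the `Pose` and `Frame` classes and the scene are hypothetical illustrations, not our digital twin software. Picking up a slide re-parents it to the gripper without moving it, after which it follows the carriage and the arm.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Pose:
    """Position (mm) and rotation about the vertical axis (rad), relative to a parent."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    yaw: float = 0.0

    def compose(self, local: "Pose") -> "Pose":
        """Express `local` (given in this frame) in this frame's parent."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose(self.x + c * local.x - s * local.y,
                    self.y + s * local.x + c * local.y,
                    self.z + local.z,
                    self.yaw + local.yaw)

    def relative(self, other: "Pose") -> "Pose":
        """Inverse of compose: express `other` (same parent) in this frame."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        dx, dy = other.x - self.x, other.y - self.y
        return Pose(c * dx + s * dy, -s * dx + c * dy,
                    other.z - self.z, other.yaw - self.yaw)


@dataclass
class Frame:
    name: str
    local: Pose = field(default_factory=Pose)
    parent: Optional["Frame"] = None

    def world(self) -> Pose:
        """Walk up the parent chain to get the pose in the platform frame."""
        if self.parent is None:
            return self.local
        return self.parent.world().compose(self.local)

    def reparent(self, new_parent: Optional["Frame"]) -> None:
        """Attach to a new parent without moving in the world, e.g. when gripped."""
        world = self.world()
        self.parent = new_parent
        self.local = world if new_parent is None else new_parent.world().relative(world)


# Hypothetical scene: an arm on a rail carriage, and a slide sitting in a rack
platform = Frame("platform")
carriage = Frame("carriage", Pose(x=200.0), platform)
arm_base = Frame("arm_base", Pose(z=50.0), carriage)
gripper = Frame("gripper", Pose(x=150.0, z=120.0), arm_base)  # fixed for brevity
rack = Frame("rack", Pose(x=350.0), platform)
slide = Frame("slide_1", Pose(z=170.0), rack)

slide.reparent(gripper)                          # pick-up: slide now follows the arm
carriage.local = Pose(x=300.0)                   # drive 100 mm along the rail
arm_base.local = Pose(z=50.0, yaw=math.pi / 2)   # and swing the arm by 90 degrees
print(slide.world())
```

Because only local poses are stored, moving the carriage updates everything attached to it without touching any child frame.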

To that end, Julius Frontzek and I have been working on a software digital twin for RoWaN (or, indeed, any robotic platform) that can 1) keep a digital representation of the real-world placement of items, and store this for traceability, and 2) do basic path planning when given an instruction like “move slide 1 to the hotplate”. This is close to a beta release now; more information on that will follow. For the time being, we program the positions and movements by hand.
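One simple way to turn “move slide 1 to the hotplate” into motions is to search a graph of hand-checked, collision-free waypoints. The sketch below swaps in plain breadth-first search for illustration; it is not the planner in our digital twin, and the waypoint names, edges, and the `plan_path`/`move_object` helpers are hypothetical.

```python
from collections import deque

# Hypothetical waypoint graph: an edge means the arm may move directly between
# two named poses without colliding with anything (checked beforehand).
EDGES = [
    ("home", "rack_above"), ("rack_above", "rack_slot_1"),
    ("home", "injector_above"), ("injector_above", "injector_tip"),
    ("home", "hotplate_above"), ("hotplate_above", "hotplate_surface"),
    ("home", "microscope_above"), ("microscope_above", "microscope_stage"),
]


def build_graph(edges):
    """Undirected adjacency lists from an edge list."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    return graph


def plan_path(graph, start, goal):
    """Breadth-first search: fewest waypoint hops from start to goal, or None."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in graph.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


def move_object(graph, arm_at, object_at, destination):
    """Turn 'move <object> to <destination>' into low-level actions."""
    to_object = plan_path(graph, arm_at, object_at)
    to_destination = plan_path(graph, object_at, destination)
    if to_object is None or to_destination is None:
        raise ValueError("no collision-free route between these waypoints")
    actions = [("goto", wp) for wp in to_object[1:]]
    actions.append(("grip", object_at))
    actions += [("goto", wp) for wp in to_destination[1:]]
    actions.append(("release", destination))
    return actions


graph = build_graph(EDGES)
for step in move_object(graph, "home", "rack_slot_1", "hotplate_surface"):
    print(step)
```

Routing every move through “above” waypoints is a crude but common way to keep the arm clear of obstacles until the graph is generated from a proper 3D model.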

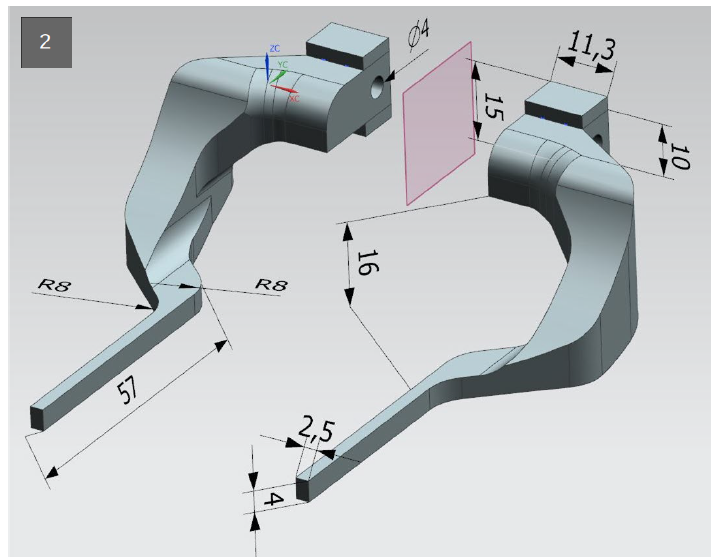

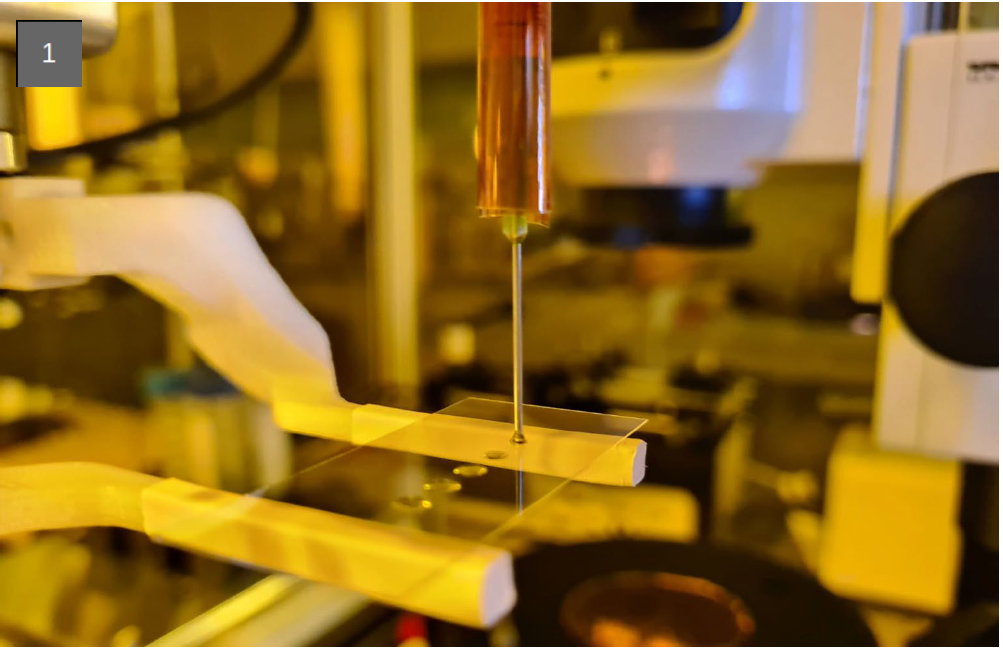

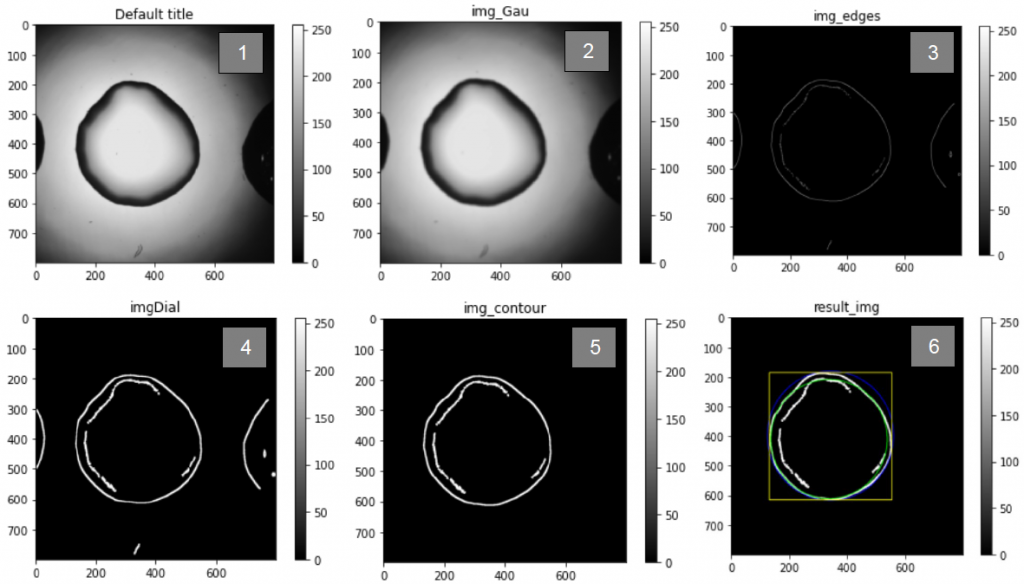

After overcoming many challenges in the integration stage (the 3D-printed sample racks and robot grippers needed regular improvement and replacement, as they broke whenever the arm accidentally moved the wrong way), Bofeng ended up with a nice path that followed all the required steps. As a bonus, he also included image analysis of the microscope images to obtain measures of drop roundness and profile. This would enable us to start optimizing drop shape and reproducibility, as we now have a good way of evaluating the means and spreads of the drop morphological parameters. A video of the whole process can be seen higher up in this blog post, or by following this link: RoWaN (Robot Without a Name) preparing rows of drops of photopolymer on slides. – YouTube.
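As an illustration of the kind of measures involved, here is a sketch of two common choices. It is not necessarily what Bofeng implemented. Roundness is taken as the circularity 4πA/P² of a top-down drop outline (1 for a circle), and the profile is summarised by a contact angle, assuming the drop is a spherical cap so that tan(θ/2) = h/a for height h and base radius a. The helper names are hypothetical, the outlines come from an upstream segmentation step that is not shown, and the demo shapes are synthetic rather than measured drops.

```python
import math
import statistics


def polygon_area_perimeter(points):
    """Shoelace area and perimeter of a closed outline given as (x, y) points."""
    area2 = 0.0
    perimeter = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        area2 += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(area2) / 2.0, perimeter


def circularity(points):
    """4*pi*A/P**2: 1.0 for a perfect circle, lower for less round outlines."""
    area, perimeter = polygon_area_perimeter(points)
    return 4.0 * math.pi * area / perimeter ** 2


def contact_angle_deg(height, base_radius):
    """Contact angle of a spherical-cap drop: tan(theta / 2) = h / a."""
    return math.degrees(2.0 * math.atan2(height, base_radius))


def summarize(values):
    """Mean and sample standard deviation of one parameter over many drops."""
    return statistics.mean(values), statistics.stdev(values)


# Synthetic outlines, not measurements: a near-circle and a square
circle = [(math.cos(2 * math.pi * k / 360), math.sin(2 * math.pi * k / 360))
          for k in range(360)]
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(circularity(circle), circularity(square), contact_angle_deg(1.0, 1.0))
```

Applying `summarize` to the per-drop values from one slide gives the means and spreads needed to compare preparation settings.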

In the end, the demand for these baked-drops-on-slides from the group working on the MPLS system wasn’t as high as I initially expected, so we haven’t used this RoWaN configuration much yet. However, the configuration is documented and stored for when we need it. For now, we’ve started rearranging the RoWaN system for another synthesis project, and will soon modify it even further to include rails under the robot arm, extending its reach dramatically. We’re keeping the 5-micron positioning reproducibility by feeding the actual rail position (as read out by an interferometer strip on the rails) forward into the offset of the robot arm position. The MPLS 3D printing system does a similar error compensation when steering the laser beam (more on that later), so there are real-world examples of this already.
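The feed-forward idea amounts to computing the arm’s target from where the carriage actually is, not from where it was told to go. Here is a minimal sketch, assuming the rail runs along the platform’s x axis and the arm base is rigidly mounted on the carriage with its axes parallel to the platform’s; `arm_target` and all numbers are hypothetical placeholders, not RoWaN values.

```python
def arm_target(platform_target, rail_reading, mount_offset=(0.0, 0.0, 0.0)):
    """Convert a target in platform coordinates (mm) into arm-base coordinates,
    using the measured carriage position along the rail (x axis assumed)."""
    tx, ty, tz = platform_target
    ox, oy, oz = mount_offset  # arm base relative to the carriage reference point
    return (tx - rail_reading - ox, ty - oy, tz - oz)


# Placeholder numbers, not measurements
target = (650.0, 20.0, 100.0)   # where the gripper must go, platform frame (mm)
commanded_rail = 500.0          # where the carriage was asked to go (mm)
measured_rail = 500.012         # what the rail readout reports (mm)

naive = arm_target(target, commanded_rail)
corrected = arm_target(target, measured_rail)
error_avoided = naive[0] - corrected[0]
print(corrected, f"rail error absorbed by the arm: {error_avoided:.3f} mm")
```

The rail only needs to get the arm roughly into place; the arm absorbs the residual, so the overall reproducibility is set by the readout and the arm rather than by the rail drive.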

For us, this RoWaN platform is a way of experimenting with building and reconfiguring automated sample preparation platforms. It provides us with plenty of learning opportunities and, once it is configured right, a whole heap of samples to keep the MOUSE fed with. The extra experience with EPICS also comes in handy during our MOUSE upgrade projects and in our interactions with the synchrotron staff (synchrotronians). The more experience we get, the better we can imagine future projects and expansions. And, as long as we have enough people to turn these ideas into practice, the future will be full of systematically produced sample series for more thoroughly supported insights.
